AI has created many opportunities to improve people’s lives, but it has also raised questions about how AI systems can best serve people.

- Fairness

- Fair model output across a diverse set of users and use cases

- Solution is available to all users

- Suggest multiple answers to the same question and evaluate the model on its top-k answers

- Use multiple metrics rather than a single one
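
Evaluating on top-k answers can be sketched as follows. This is a minimal illustration, not a standard library API: the function name `top_k_accuracy` and the toy predictions are assumptions made for the example.

```python
def top_k_accuracy(ranked_predictions, labels, k):
    """Fraction of examples whose true label appears among the top-k predictions."""
    hits = sum(
        1 for preds, label in zip(ranked_predictions, labels) if label in preds[:k]
    )
    return hits / len(labels)


# Hypothetical ranked model outputs (best guess first) for four inputs.
ranked = [
    ["cat", "dog", "fox"],
    ["dog", "cat", "fox"],
    ["fox", "dog", "cat"],
    ["dog", "fox", "cat"],
]
truth = ["cat", "cat", "cat", "cat"]

# Reporting several k values gives more than one metric for the same model.
report = {k: top_k_accuracy(ranked, truth, k) for k in (1, 2, 3)}
print(report)
```

Computing the same report separately for each user group is one way to check whether the model serves those groups equally well.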

- Explainability

- Understand how and why the AI system makes a decision

- Help with fairness

- Google Explainability White Paper

- Privacy

- A model trained with sensitive information or PII (personally identifiable information) should safeguard this information

- Aggregate and anonymize the sensitive data

- Design the features with disclosure built-in

- Legal compliance

- GDPR (General Data Protection Regulation)

- EU

- Gives individuals control over their data

- Companies should protect the data of employees and customers

- The data subject has the right to revoke their consent at any time

- CCPA (California Consumer Privacy Act)

- Similar to GDPR

- Consumers have the right to know what personal data is collected about them, whether it is sold or disclosed, and to whom

- Users can access the data that a company holds about them, block the sale of their data, and request that a business delete their data

- Anonymization – remove PII

- Irreversible

- Impossible to identify the person

- Impossible to derive insights or discrete information about an individual, even for the party responsible for the anonymization

- Pseudonymization

- Reversible

- Possible to identify the person if the right information is included

- Data masking, encryption, tokenization
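
The difference between the two approaches can be sketched in a few lines. This is an illustrative example under stated assumptions: `TokenVault`, `anonymize`, and the record fields are hypothetical names, and real anonymization also has to deal with quasi-identifiers that this toy ignores.

```python
import secrets


class TokenVault:
    """Pseudonymization by tokenization: reversible for whoever holds the vault."""

    def __init__(self):
        self._token_by_value = {}
        self._value_by_token = {}

    def tokenize(self, value):
        # Reuse the same random token for a repeated value so records stay linkable.
        if value not in self._token_by_value:
            token = secrets.token_hex(8)
            self._token_by_value[value] = token
            self._value_by_token[token] = value
        return self._token_by_value[value]

    def detokenize(self, token):
        return self._value_by_token[token]


def anonymize(record):
    """Drop the direct identifier and coarsen age; no mapping back is kept."""
    decade = (record["age"] // 10) * 10
    return {"age_range": f"{decade}-{decade + 9}", "diagnosis": record["diagnosis"]}


record = {"email": "alice@example.com", "age": 34, "diagnosis": "flu"}

vault = TokenVault()
pseudonymized = {**record, "email": vault.tokenize(record["email"])}
anonymized = anonymize(record)

print(pseudonymized)
print(anonymized)
```

The pseudonymized record can be re-identified with `vault.detokenize`, which is why the vault must be stored separately from the data. The anonymized record has no such path back.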

- Security

- Identify threats to the AI system from malicious intent

- Harms: informational and behavioral

- Defenses

- Cryptography

- SMPC – Secure Multi-Party Computation

- Allow multiple systems to collaborate to train/serve a model

- Keep the data secured with shared secrets
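
One common building block behind shared secrets is additive secret sharing, sketched below. This is a toy illustration, not a full SMPC protocol: the modulus `PRIME`, the helper names `share` and `reconstruct`, and the three-party setup are assumptions made for the example.

```python
import secrets

# Field modulus for the shares (2**61 - 1 is a Mersenne prime); an illustrative choice.
PRIME = 2**61 - 1


def share(secret, n_parties):
    """Split a secret into n random-looking shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares):
    """Recover the secret; this needs every share."""
    return sum(shares) % PRIME


# Two data owners split their private values among three compute parties.
a_shares = share(120, 3)
b_shares = share(45, 3)

# Each party adds the two shares it holds, never seeing either raw value.
sum_shares = [(a + b) % PRIME for a, b in zip(a_shares, b_shares)]

total = reconstruct(sum_shares)
print(total)  # 165
```

Any single share is uniformly random on its own, so a party learns nothing about the inputs unless all parties combine their shares.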

- FHE – Fully Homomorphic Encryption

- Train on encrypted data without decrypting it first

- Send encrypted request and receive encrypted result

- Very computationally expensive currently

- Differential Privacy

- Provides a provable privacy guarantee

- Methods

- DP-SGD (Differentially Private Stochastic Gradient Descent)

- Limit how much private information can be extracted from the weights of the model

- Clip each example's gradient and add noise to the mini-batch gradient

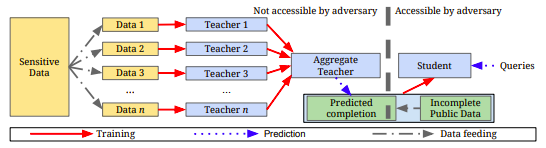
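
A single DP-SGD update can be sketched in plain Python as below. This is a minimal sketch under stated assumptions: the function name `dp_sgd_step` and its parameters are hypothetical, per-example gradients are assumed to be already computed, and the privacy accounting that turns the noise level into a formal guarantee is not shown.

```python
import math
import random


def dp_sgd_step(weights, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One update: clip each example's gradient, sum, add Gaussian noise, average."""
    summed = [0.0] * len(weights)
    for grad in per_example_grads:
        norm = math.sqrt(sum(g * g for g in grad))
        # Scale the gradient down so its L2 norm is at most clip_norm.
        scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for i, g in enumerate(grad):
            summed[i] += g * scale

    # Noise standard deviation is proportional to the clipping bound.
    sigma = noise_multiplier * clip_norm
    batch_size = len(per_example_grads)
    noisy_mean = [(s + rng.gauss(0.0, sigma)) / batch_size for s in summed]

    return [w - lr * g for w, g in zip(weights, noisy_mean)]


rng = random.Random(0)
weights = [0.0, 0.0]
grads = [[3.0, 4.0], [0.3, 0.4]]  # hypothetical per-example gradients
new_weights = dp_sgd_step(weights, grads, lr=0.1, clip_norm=1.0,
                          noise_multiplier=1.1, rng=rng)
print(new_weights)
```

Clipping bounds how much any one training example can move the weights, and the noise masks whatever influence remains.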

- PATE (Private Aggregation of Teacher Ensembles)

- Divide sensitive data into K partitions without overlap

- Train K models as teacher models

- Aggregate the predictions of the K teacher models into one teacher output and add noise to the result

- Create a student model by training on the noisy aggregated teacher predictions

- Only the student model is accessible to users (including attackers)

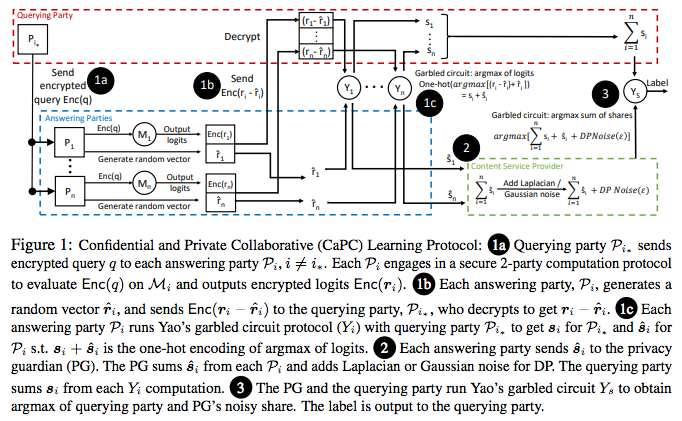
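
The partitioning and noisy aggregation steps of PATE can be sketched as follows. This is an illustrative sketch, not a reference implementation: the helper names, the Laplace noise scale, and the hard-coded teacher votes are assumptions, and training the teacher and student models is left out.

```python
import random
from collections import Counter


def partition(data, k):
    """Split data into k disjoint partitions, one per teacher."""
    return [data[i::k] for i in range(k)]


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials is Laplace-distributed with this scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_aggregate(teacher_votes, num_classes, noise_scale, rng):
    """Return the class with the highest noisy vote count."""
    counts = Counter(teacher_votes)
    noisy = [counts[c] + laplace_noise(noise_scale, rng) for c in range(num_classes)]
    return max(range(num_classes), key=lambda c: noisy[c])


rng = random.Random(0)
parts = partition(list(range(10)), 3)  # each teacher sees one disjoint slice

# Hypothetical votes from five teachers on one unlabeled student example.
votes = [1, 1, 1, 0, 2]
student_label = noisy_aggregate(votes, num_classes=3, noise_scale=1.0, rng=rng)
print(parts, student_label)
```

The student is trained only on labels produced by `noisy_aggregate`, so it never touches the sensitive partitions directly.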

- CaPC (Confidential and Private Collaborative learning)

- Uses multiple cryptographic building blocks so that multiple parties can train/serve together without directly sharing raw data

- Uses HE (Homomorphic Encryption)

- Uses PATE

- Ex: hospitals want to collaborate to improve the model prediction without sharing sensitive data
