In hard to keep track of all the various reinforcement learning terminologies. Often I forget the name of some of these algorithms, so I made this list to give an overview to serve as a reminder.

Prediction vs Control

- Prediction: calculate/estimate the consequence of an action

- Estimate value function of state or state-action

- Policy evaluation is also a kind of prediction

- Control: finding a policy to take action

On-policy vs Off-policy

- On-policy: Update Q value of the states that are visited as chosen by the policy

- Off-policy: Update Q value of the states that are not chosen by the policy

Model-based vs Model-free

- Model-based: there is a model that predict the next state transition probability and the reward probability

- The agent has access to the model of the environment

- The agent has an approximation model of the environment

- Model-free

- Does not use transition probabilities or reward distribution

- Directly model the Q values as a function of state and action

Planning vs Learning

- Planing: If the agent has a model of the environment (model-based), it can “think” about making moves without actually taking action

- To create or improve a policy without taking action

- Learning: To learn the value function and policy without a model (model-free)

Direct RL vs Indirect RL

- Direct: directly improve the value function and the policy without a model (model-free)

- Indirect: improve the value function and the policy via the model (model-based)

Value-Iteration vs Policy-Iteration

- Value iteration

- Finding optimal value function, and then extract the optimal policy directly

- There is no repeat of the two because once the value function is optimal, then the policy out of it should also be optimal (i.e. converged) by choosing the optimal action based on the optimal value function

- Policy iteration

- Compute the optimal policy by iteratively finding the value function corresponding to a given policy and then improving that policy.

- Policy evaluation + policy improvement, and the two are repeated iteratively until policy converges

- Makes use of transition probabilities and reward function of the MDP (model-based, need access to a model)

They are both types of Generalized Policy Iteration (GPI), where policy and value function are both improved turn by turn.

Tabular vs functional approximation

- Tabular: problems in which the state and actions spaces are small enough for approximate value functions to be represented as arrays and tables

- Functional approximation: use approximate function to estimate the true value function

- When the state space becomes too large, the tabular methods become insufficient and unsuitable.

- Use these set of features to generalize the estimation of the value at states that have similar features.

- Typically achieve faster computation and much more generalizations.

Online vs episodic

- Online: value-function or policy can be updated or learned at each time step

- Episodic: value-function or policy is updated only at the end of an episode

Monte Carlo Methods

- A broad class of algorithms that is also popular outside of the reinforcement learning world

- Sample many random path/trajectories

- Update the value function only once when the full trajectory (episode) is done

Off-policy Monte-Carlo

- Estimate the value function with respect to a target policy

- Off-policy: use experience from some different behavior policy

- Monte-Carlo: the targets are empirically observed returns by waiting til the end of episodes

Exploring start Monte-Carlo

- A type of control method that help to estimate the Q function to find better policy

- In contrast, Monte-Carlo is usually used for a prediction setting

- The exploring part makes this is a policy (control method)

- Guarantees all state-action pairs are visited by starting a simulation at a random state and taking a random action from that state to ensure all state-action pairs have nonzero probability of being selected from the start

TD – Temporal Difference Learning

- A broad class of learning methods

- Learn by bootstrapping the current estimated value function

- Difference from Monte-Carlo: TD adjusts predictions to match later, more accurate, predictions about the future before the final outcome is known

- The difference refers to the difference between the target and the current value

- Target – something that is based on the reward with some adjustment of the (estimated) value of the next state

- Current value – the value calculated by the current value function

Dynamic Programming Methods

- More well known as an general computer programming method

- Use recursive relationship of the Bellman Equation to solve a bigger problem

TD-lambda

- An extension to the Temporal Difference Learning method

- Lambda refers to the trace decay parameter (larger meaning longer lasting effect, so giving more credit of a reward to more distant states)

Degree of bootstrapping

- Computation efficiency of DP

- Data efficiency of TD

- Introduces bias in asymptotic performance

- Controlled by lambda of TD-lambda: 0 means full bootstrapping, 1 means no bootstrapping

TD(0) – aka one-step TD

- TD with lambda = 0 (full bootstrapping)

- One-step TD, only look ahead one step (in contrast with Monte Carlo)

- Note the value function evaluated with S-prime (next state)

- Target is estimated for two reasons

- Using samples (just like Monte Carlo)

- Using bootstrapping of the current value

SARSA – State, Action, Reward, State, Action

- A control method (not a prediction method)

- Model free – it does not track a model to predict the next state or reward from the environment, but rather just use its experience

- A TD control method (it is a type of TD methods)

- On-policy method – it learns from the action chosen by the policy

- The Q value that gets updated depends on the action chosen by the policy

- Goal is to learn the Q function

- The key point is the sample Q(next state, next action) is used to calculate the error for updating the Q value

- This form of update is not gradient based because this error term is not the gradient of any value function

- More suitable for episodic task because of the discounted reward

Expected SARSA

- Very similar to SARSA

- Also a TD control method

- Also model-free (Note that Q function is modeled directly from experience without transition probabilities)

- Instead of using the sample next state-action, it average over all actions from the policy

- Can be either on-policy or off-policy

- If the expected value is taken over the action distribution from the policy, then on-policy

- If the expected value is taken over a different distribution, then off-policy

Q-learning

- Q refers to the Q function: the expected rewards for an action taken in a given state

- Very similar to SARSA

- Also model free

- Also a TD method (in particular a type of TD(0) method)

- Difference is in the Q value update

- The key point is the maximum Q(next state, best action) is used to update the Q value

- This is off-policy learning – learns from actions that are outside the current policy (ie it learns the value of the optimal policy independently of the agent’s actions)

- It is a special case of expected SARSA because it’s a distribution with 100% on the greedy policy

- It cannot be on-policy because you have to use a different policy to explore the state-action space.

- Require exploration and a partly-random policy to ensure all states are explored to choose the best action

Target policy vs behavior policy

- Target policy: the one for which we are learning the value function

- Behavior policy: selects the action for the agent (for exploration)

Dyna-Q

- A planning algorithm

- Uses a model to learn from both simulated and real experience (model-based)

- From real experience: uses Q-learning to update the Q function (one-step tabular q-learning)

- From experiences: Indirect RL – update all Q(s,a) every time you query them from the memory (the model). You don’t have to revisit them with actions.

- Randomly sample previously visited states and actions

Dyna-Q+

- An extension of Dyna-Q

- Also model-based

- Encourage visiting states that haven’t been tried for a long time

- Handle non-stationary environment better than Dyna-Q

- since there is a greater chance that the dynamics for that actions might have changed (non-stationary)

Policy Gradient

- Changes in the parameters of a policy can affect the return of a policy from a specific starting state

- The gradient is on the return (total reward) with respect to the parameters

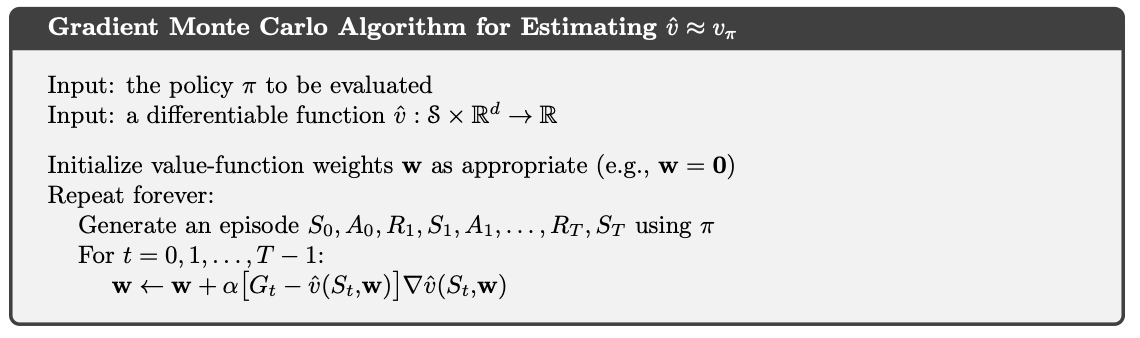

Gradient Monte Carlo

- Produces true gradients (unbiased) of the parameters w of the value-function

- Guarantee to find local optimal in prediction setting (estimating value-function)

Semi-Gradient Methods

- Use bootstrapping estimate of value function

- Target is n-step return of the current value function or a DP target, but they depend on w in all case

- Causes bias in the target return because depending on w (bootstrapped)

- Gradient: the gradient of the value function with respect to the parameter w

- Semi: refers to the bias means such gradient is a part of the true gradient

Semi-gradient TD(0) for estimation

- Semi-gradient: gradient is biased because the target return is bootstrapped from the current value-function

- TD(0): the one step

- Estimation: learn the value function (not a control method)

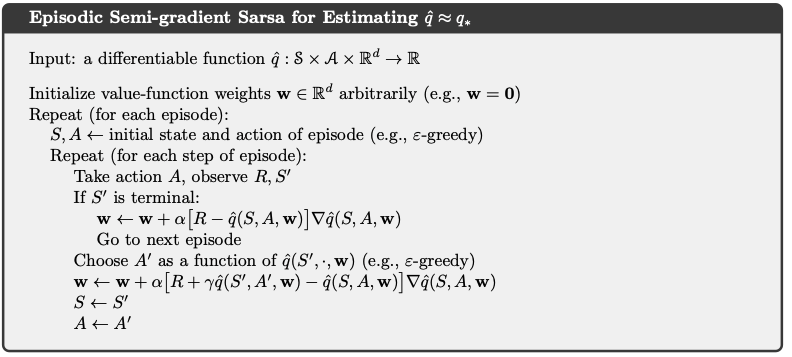

Episodic Semi-Gradient SARSA for estimation

- An extension of the Semi-Gradient method

- SARSA: the fact that it uses next state-action to update the current state action

- But the value-function is updated via the parameter w

- We update w using semi-gradient method because of the bootstrapping nature

Differential Semi-Gradient SARSA

- In Average reward setting

- A control method

- Gradient: some kind of function approximation (ie linear) that parameterizes the value function with continuous parameters, so the gradient is about this parameter

- Differential: a differential version of TD error (delta)

- for continuing task we care about the differential return (differential value function) which is return – average reward

Softmax Policy vs Epsilon Greedy Policy

- Epsilon Greedy Policy: assign large probability to the top policy, and then equally distribute the reminding epsilon probability among the remaining suboptimal actions

- Softmax Policy: where the probability of action is by take the softmax function over the action preference vector. We cannot directly use the preference vector as the policy probability because the preference values do not represent a probability vector, so softmax is a just to normalize it. Softmax policy is also able to distinguish not-as-optimal action vs very bad action. There is a parameter tau called the temperature to adjust the degree of randomness. Higher value of tau moves the policy toward a uniform random policy.

- For numerical stability, we often subtract the action preference by the max value so that we are not exponentiating over very large values

Actor-Critic

- Actor: decides how to choose the best action (finds an optimal policy) based on the current estimated value function to improve the policy

- Critic: evaluate the return of the current policy

Gaussian Actor-Critic

- A type of actor-critic method where the parameters of the policy is Gaussian distributed (continuous, not discrete)

Average Reward Actor-Critic

- A type of actor-critic method for a continuing task, we need to use average reward per step

- Using average reward allows the algorithm to not depend on the discount factor

Differential Softmax Actor-Critic

- Uses functin approximation to parameterize a state-conditional categorical distribution over actions

- Discrete action space

- Differential: some differential version of TD error using the difference between current return and average return (suitable in continuing setting)

Reference: most of the material of this post comes from the book Reinforcement Learning

2 thoughts on “Reinforcement Learning Glossary”